In my last article, we implemented an in-memory cache for Angular Universal (SSR) rendered routes in the Node.js backend, using the memory-cache package.

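But there is a problem here.

We want to scale our application to serve more users, which means running several instances of it. An in-memory cache lives inside a single Node.js process, so each instance would keep its own separate copy. To scale, we need a cache that is shared by all application instances.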
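To make the problem concrete, here is a minimal sketch in which each application instance is modeled as an object holding a `Map` as its cache. The names `AppInstance` and `renderRoute` are hypothetical and only illustrate the idea; they are not part of the previous article's code.

```typescript
// Hypothetical model of an app instance. With a per-process cache,
// every instance owns its own Map; with a shared cache, all instances
// receive the same store (the role Redis plays in this article).
class AppInstance {
  renderCount = 0;
  private cache: Map<string, string>;

  constructor(cache: Map<string, string>) {
    this.cache = cache;
  }

  // Return the cached HTML for a route, rendering it only on a miss.
  renderRoute(url: string): string {
    const cached = this.cache.get(url);
    if (cached !== undefined) {
      return cached;
    }
    this.renderCount++;
    const html = `<html>rendered ${url}</html>`;
    this.cache.set(url, html);
    return html;
  }
}

// Per-process caches: each instance has to render "/" itself.
const isolatedA = new AppInstance(new Map<string, string>());
const isolatedB = new AppInstance(new Map<string, string>());
isolatedA.renderRoute('/');
isolatedB.renderRoute('/');
const isolatedRenders = isolatedA.renderCount + isolatedB.renderCount;

// Shared cache: the second instance reuses what the first one rendered.
const sharedStore = new Map<string, string>();
const sharedA = new AppInstance(sharedStore);
const sharedB = new AppInstance(sharedStore);
sharedA.renderRoute('/');
sharedB.renderRoute('/');
const sharedRenders = sharedA.renderCount + sharedB.renderCount;

// isolatedRenders is 2, sharedRenders is 1
console.log({ isolatedRenders, sharedRenders });
```

With isolated caches the same page is rendered once per instance; with a shared store it is rendered only once overall.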

I will not go into the different kinds of caches and why caching is key for scaling, but if you want to learn more about it, here is a good article.

Redis cache?

One of the best caching solutions is Redis.

As described on their website:

Redis is an open-source, in-memory data store used by millions of developers as a database, cache, streaming engine, and message broker.

And what are we? We are developers, so I'll join the community and make it millions and one developers (I know, bad joke 😅).

Anyway.

We'll update the example from my last article to use Redis instead of memory-cache.

Requirements?

We'll need a Redis instance, so I'll run one using Docker.

Once Docker is installed, we'll download the Redis image and create a container by executing the following commands:

docker pull redis

docker images

docker run -it --name redis0 -p 6379:6379 -d redis

Redis is up and running on the default port 6379.

Now we'll install the Redis package for Node.js:

npm install redis

The code

Now let's implement the Redis cache in our server.ts.

We'll create a function, getCacheProvider, that creates a Redis client object we can use for caching.

import { createClient } from 'redis';

// Create a Redis client object; we'll name it cacheManager

export function getCacheProvider() {

const cacheManager = createClient({

// Redis connection string, username: default, password: redispw

// and it's running on localhost on port 6379

url: "redis://default:redispw@localhost:6379",

// Disable the Redis offline queue: if Redis is down, commands

// fail immediately with an error we can handle, so the application

// keeps running. Otherwise commands would be queued while Redis

// tries to reconnect, and our application would stop responding

disableOfflineQueue: true,

// Wait 5 seconds before trying to reconnect if Redis

// instance goes down

socket: {

reconnectStrategy() {

console.log('Redis: reconnecting ', new Date().toJSON());

return 5000;

}

}

})

.on('ready', () => console.log('Redis: ready', new Date().toJSON()))

.on('error', err => console.error('Redis: error', err, new Date().toJSON()));

// connect to the Redis instance

cacheManager.connect().then(it => {

console.log('Redis Client Connected')

}).catch(error => {

console.error("Redis couldn't connect", error);

});

// Return the client so callers can use it for caching

return cacheManager;

}
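The fixed 5-second delay above is one option. In node-redis, `reconnectStrategy` receives the number of retries so far, so the delay can also grow with each attempt. Below is a minimal sketch of a capped exponential backoff; the function name `reconnectDelay` and both constants are assumptions chosen for illustration, not values from the setup above.

```typescript
// Hypothetical helper: compute the delay (in ms) before the next
// reconnection attempt, doubling each time up to a maximum.
const BASE_DELAY_MS = 500;   // assumed starting delay
const MAX_DELAY_MS = 30000;  // assumed upper bound

function reconnectDelay(retries: number): number {
  // Clamp the exponent so that very large retry counts stay finite.
  const exponent = Math.min(Math.max(retries, 0), 16);
  return Math.min(BASE_DELAY_MS * 2 ** exponent, MAX_DELAY_MS);
}

// First attempts: 500 ms, 1000 ms, 2000 ms, then capped at 30000 ms.
console.log([0, 1, 2, 10].map(reconnectDelay));
```

It could then be passed to the client as `socket: { reconnectStrategy: reconnectDelay }`. Short delays at first recover quickly from a brief outage, while the cap avoids hammering a Redis instance that stays down.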

Now that we have our cache provider, we can use it for caching requests instead of our old friend memory-cache.

export function app(): express.Express {

// ...

const cacheProvider = getCacheProvider();

server.get('*', (req, res, next) => {

console.log(`Looking for route in cache: ` + req.originalUrl);

cacheProvider.get(req.originalUrl).then(cachedHtml => {

if (cachedHtml) {

res.send(cachedHtml);

} else { next(); }

}).catch(error => {

console.error(error);

next();

});

},

// Angular SSR rendering

(req, res) => {

res.render(indexHtml,

{ req, providers: [

{provide: APP_BASE_HREF, useValue: req.baseUrl}

]},

(err, html) => {

// Cache the rendered `html` for this request URL for 300 seconds

// (node-redis v4 takes the expiry as an options object)

cacheProvider.set(req.originalUrl, html, { EX: 300 })

.catch(cacheError => console.log('Could not cache request', cacheError));

res.send(html);

});

});

// ...

}
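The lookup-then-render flow above is the cache-aside pattern. The sketch below isolates it from Express and Redis so it can be tried without a running Redis instance. `CacheLike`, `InMemoryCache` and `getOrRender` are hypothetical names, and the in-memory class is only a stand-in for the Redis client.

```typescript
// Minimal interface covering the two cache calls the route handler needs.
// The options shape mirrors the `{ EX: seconds }` form used with Redis.
interface CacheLike {
  get(key: string): Promise<string | null>;
  set(key: string, value: string, options: { EX: number }): Promise<unknown>;
}

// Stand-in for Redis, for demonstration only: stores values with an expiry time.
class InMemoryCache implements CacheLike {
  private entries = new Map<string, { value: string; expiresAt: number }>();

  async get(key: string): Promise<string | null> {
    const entry = this.entries.get(key);
    if (!entry || entry.expiresAt <= Date.now()) {
      this.entries.delete(key);
      return null;
    }
    return entry.value;
  }

  async set(key: string, value: string, options: { EX: number }): Promise<void> {
    this.entries.set(key, { value, expiresAt: Date.now() + options.EX * 1000 });
  }
}

// Cache-aside: return the cached value if present, otherwise render,
// store the result, and return it. Cache failures never block rendering.
async function getOrRender(
  cache: CacheLike,
  key: string,
  ttlSeconds: number,
  render: () => Promise<string>,
): Promise<string> {
  try {
    const cached = await cache.get(key);
    if (cached !== null) {
      return cached;
    }
  } catch (error) {
    console.error('Cache lookup failed, rendering instead', error);
  }
  const html = await render();
  // Store in the background; a failed write is logged, not thrown.
  cache.set(key, html, { EX: ttlSeconds })
    .catch(error => console.error('Could not cache request', error));
  return html;
}
```

Calling `getOrRender(cache, req.originalUrl, 300, renderFn)` twice with the same key should invoke the hypothetical `renderFn` only once, as long as the entry has not expired.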

Insights

Now let's run the application and check if our data is cached in Redis.

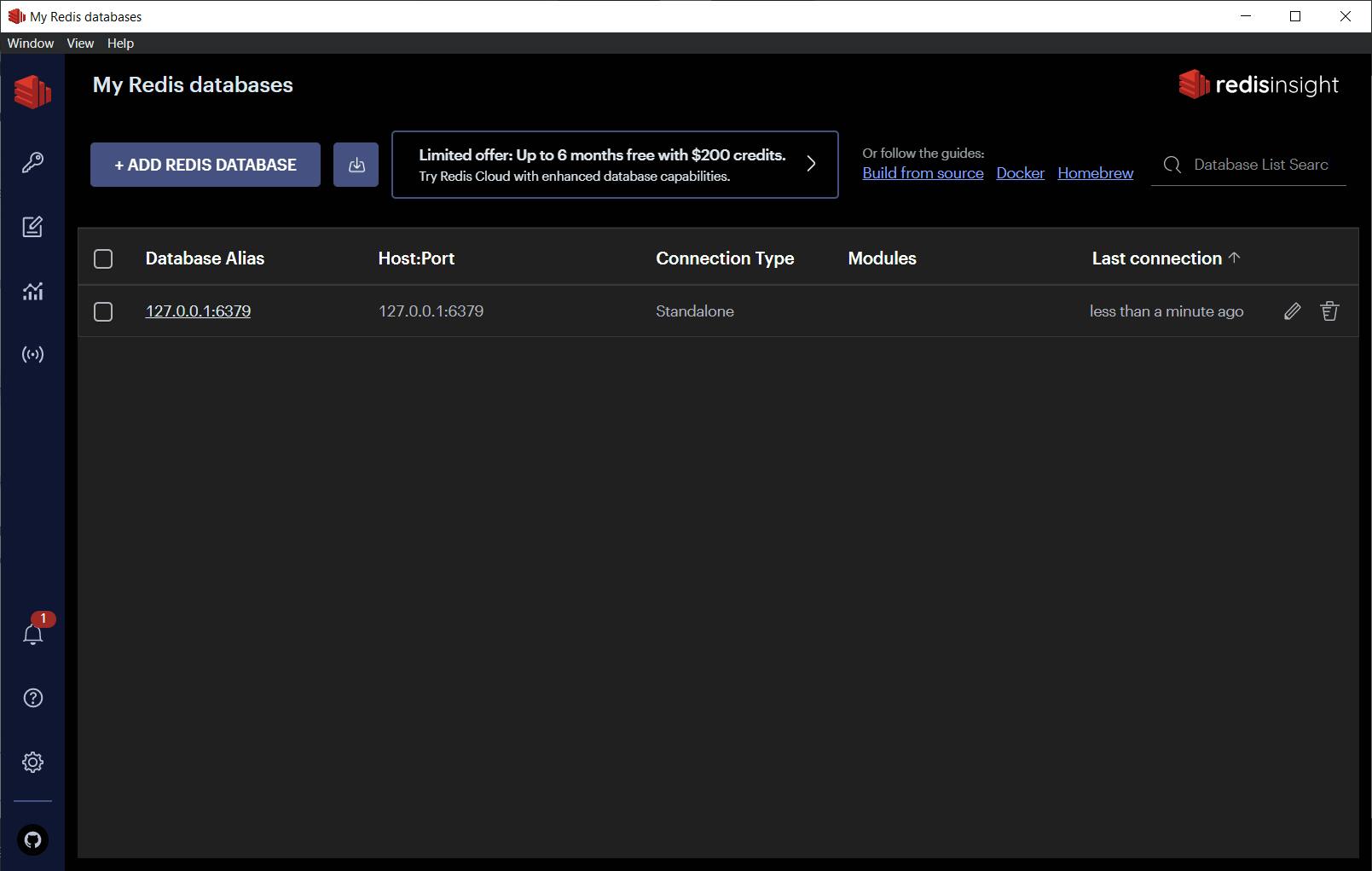

For that, we'll use a tool by Redis called RedisInsight:

RedisInsight provides an intuitive and efficient UI for Redis and Redis Stack and supports CLI interaction in a fully-featured desktop UI client.

The tool is easy to use and will give us a GUI to see what we have in our cache.

This is the main user interface, where we can see our Redis instances.

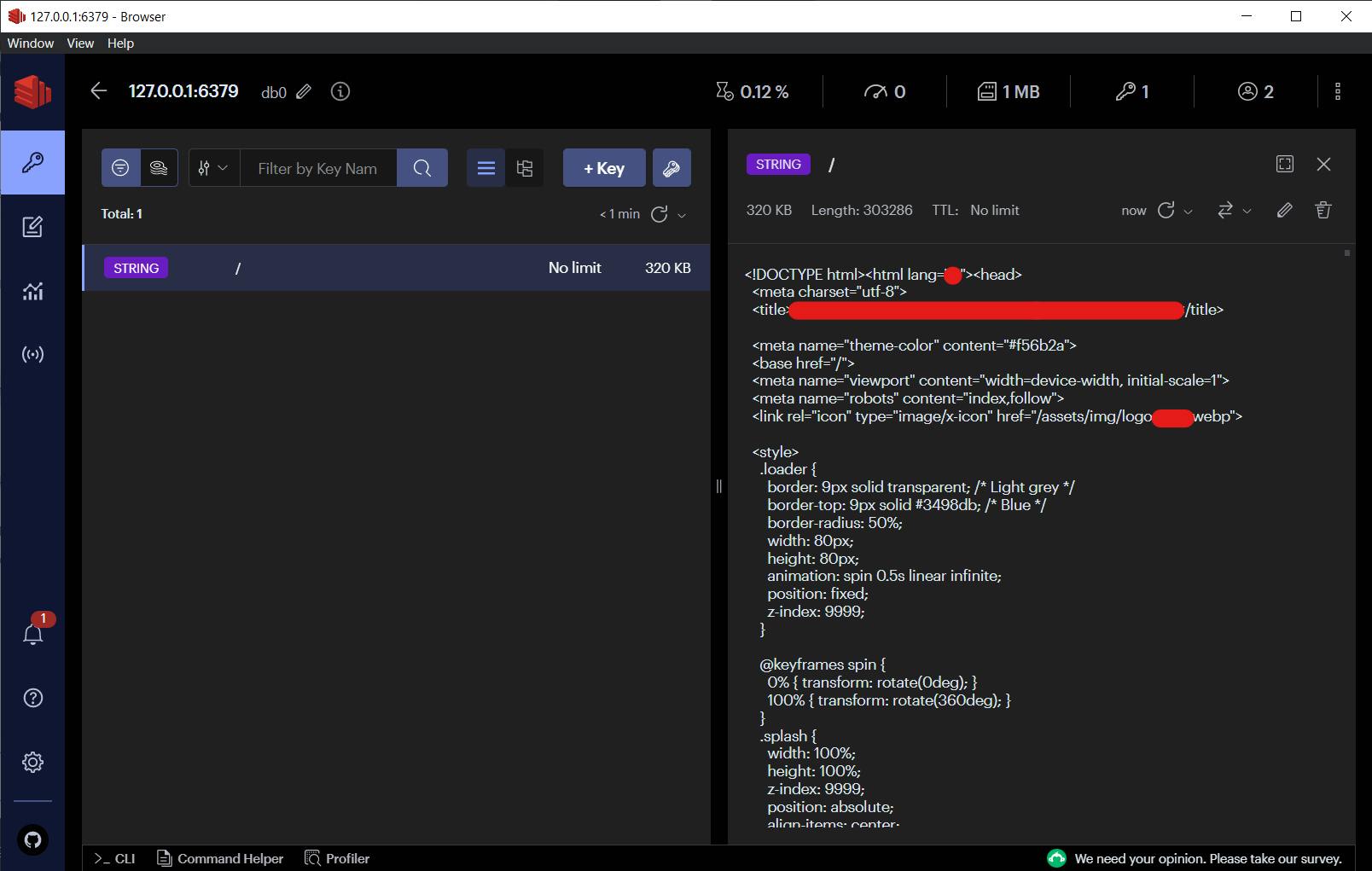

And here we have the cache explorer.

We can see that our cache contains one key "/" that contains the cached homepage.
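Since the key is simply the request URL, two URLs that differ only in query parameter order end up as two separate entries. The sketch below shows one possible way to derive keys; the function name `cacheKeyFor` and the `ssr:` prefix are assumptions for illustration and are not used in the code above.

```typescript
// Hypothetical prefix that namespaces this app's keys in a shared Redis.
const KEY_PREFIX = 'ssr:';

function cacheKeyFor(originalUrl: string): string {
  // Split the URL into path and query string at the first "?".
  const queryStart = originalUrl.indexOf('?');
  const path = queryStart === -1 ? originalUrl : originalUrl.slice(0, queryStart);
  const query = queryStart === -1 ? '' : originalUrl.slice(queryStart + 1);

  // Sort query parameters so "?a=1&b=2" and "?b=2&a=1" share one entry.
  const sortedQuery = query
    .split('&')
    .filter(part => part.length > 0)
    .sort()
    .join('&');

  return KEY_PREFIX + path + (sortedQuery ? `?${sortedQuery}` : '');
}

console.log(cacheKeyFor('/'));                  // ssr:/
console.log(cacheKeyFor('/products?b=2&a=1'));  // ssr:/products?a=1&b=2
```

With keys built this way, the homepage would show up in the cache explorer as "ssr:/" instead of "/".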

Hope this helps someone.

Feel free to comment if you have questions or recommendations.

Keep coding everyone.